Amazon Bedrock: Generative AI Without Training Models

Introduction

In recent years, generative AI has reshaped the technology landscape.

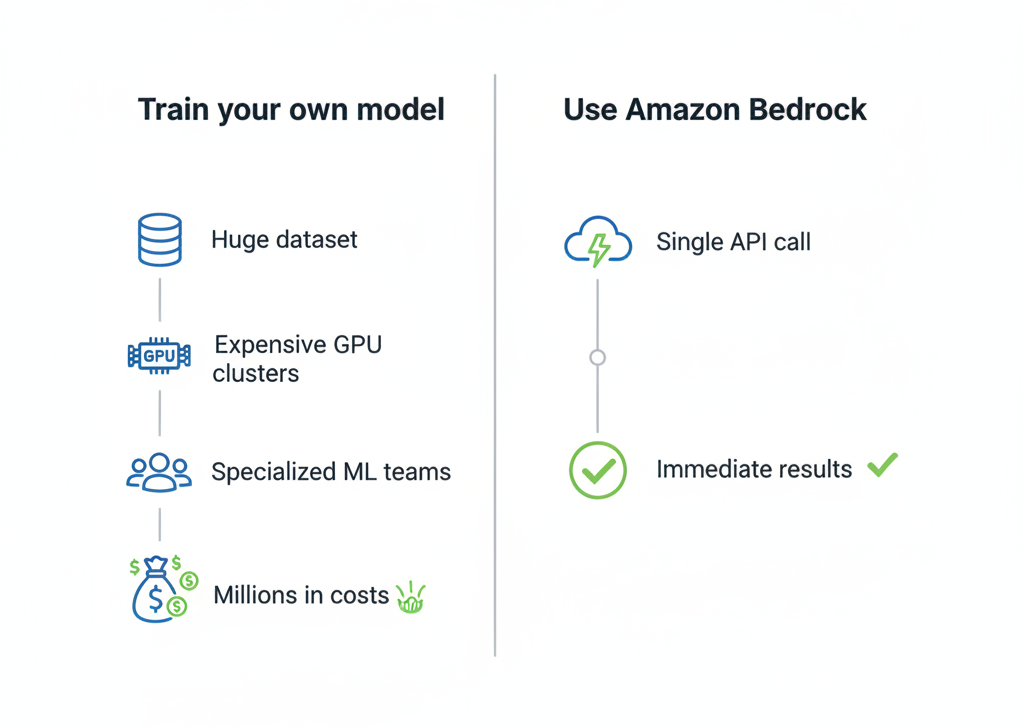

Models like GPT, Claude, and Llama have shown tremendous potential—but building a model of this kind from scratch is simply not realistic for most companies:

- It requires massive amounts of data and highly specialized infrastructure (GPUs, distributed clusters).

- Costs can reach millions of dollars.

- Ongoing maintenance and updates demand highly skilled teams dedicated full time.

When people think about AI in the cloud, they picture servers packed with GPUs, distributed clusters, and teams of data scientists working around the clock.

The truth? That scenario exists… but only for a handful of big tech companies.

For most organizations, building a model from scratch is too expensive, too complex, and too risky.

So what’s the alternative?

AWS’s answer is Amazon Bedrock: a service that promises to make generative AI accessible to anyone—no need to build models, no need to maintain infrastructure, no need to become a machine learning expert.

What Is Amazon Bedrock?

Amazon Bedrock makes it possible to integrate Foundation Models (FMs) into an application without managing the underlying infrastructure.

Key features include:

- Serverless AI: no GPUs or clusters to configure.

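- Multi-model choice: models from Anthropic, Meta, Mistral AI, and Amazon (Titan), among others.

- Pay per use: with on-demand pricing you pay for the tokens you send and receive, with no upfront commitment.

- AWS integration: access is controlled through IAM, and the service sits alongside the rest of your AWS stack.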
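To make "pay per use" concrete, here is a minimal sketch of the arithmetic behind a token-based bill. The prices and traffic figures are placeholders chosen for illustration, not actual Bedrock prices, and the function and type names are hypothetical; check the current pricing page for the model and region you use.

```typescript
// Hypothetical per-1,000-token prices; real prices vary by model and region.
interface TokenPricing {
  inputPer1k: number;  // USD per 1,000 input tokens
  outputPer1k: number; // USD per 1,000 output tokens
}

// Estimate the cost of a single call from its input and output token counts.
function estimateCallCost(
  inputTokens: number,
  outputTokens: number,
  pricing: TokenPricing
): number {
  return (
    (inputTokens / 1000) * pricing.inputPer1k +
    (outputTokens / 1000) * pricing.outputPer1k
  );
}

// Placeholder numbers for illustration only, NOT actual Bedrock prices.
const examplePricing: TokenPricing = { inputPer1k: 0.001, outputPer1k: 0.002 };

// Assumed workload: 2,000 input tokens and 500 output tokens per call,
// 10,000 calls per month.
const costPerCall = estimateCallCost(2000, 500, examplePricing);
const costPerMonth = costPerCall * 10000;
```

With these placeholder figures a call costs roughly 0.003 USD and the month roughly 30 USD. The point is that the bill scales with tokens, so long prompts and long answers cost more than short ones.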

Bedrock is Not (Just) a Service: It’s a New Approach

With Bedrock, you don’t get your hands dirty with GPUs or data centers. You make an API call, and it gives you intelligence back.

It’s like having a power outlet for AI: you plug in and get energy without asking what happens inside the power plant.

And here’s the interesting twist: you’re not tied to a single model.

Bedrock is more like a showroom of Foundation Models, each with its own personality, ready to be tested, swapped, and applied depending on your needs.
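One way to picture model swapping is a request whose shape stays the same while only the model ID changes. The sketch below assumes Bedrock's Converse API (`ConverseCommand` in `@aws-sdk/client-bedrock-runtime`), which this article does not otherwise use. It only builds the request objects, so it runs without AWS access. The model IDs are examples; verify them against the models enabled in your account and region.

```typescript
// Converse-style request: the same structure regardless of the model behind it.
interface ConverseInput {
  modelId: string;
  messages: { role: "user" | "assistant"; content: { text: string }[] }[];
  inferenceConfig: { maxTokens: number; temperature: number };
}

// Example model IDs (assumptions); check the Bedrock console for the exact IDs
// available to you.
const MODELS = {
  claude: "anthropic.claude-3-haiku-20240307-v1:0",
  llama: "meta.llama3-8b-instruct-v1:0",
  mistral: "mistral.mistral-7b-instruct-v0:2",
} as const;

type ModelName = keyof typeof MODELS;

// Build a request for the chosen model; only `modelId` differs between models.
function buildRequest(
  model: ModelName,
  question: string,
  maxTokens = 300
): ConverseInput {
  return {
    modelId: MODELS[model],
    messages: [{ role: "user", content: [{ text: question }] }],
    inferenceConfig: { maxTokens, temperature: 0.2 },
  };
}

// The same question prepared for every model in the table above.
const requests = (Object.keys(MODELS) as ModelName[]).map((name) =>
  buildRequest(name, "What is Amazon Bedrock?")
);
```

Each object could then be passed to `new ConverseCommand(input)` and sent with a `BedrockRuntimeClient`, which makes side-by-side comparisons a matter of changing one argument.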

Model Choice: Which Personality Do You Need?

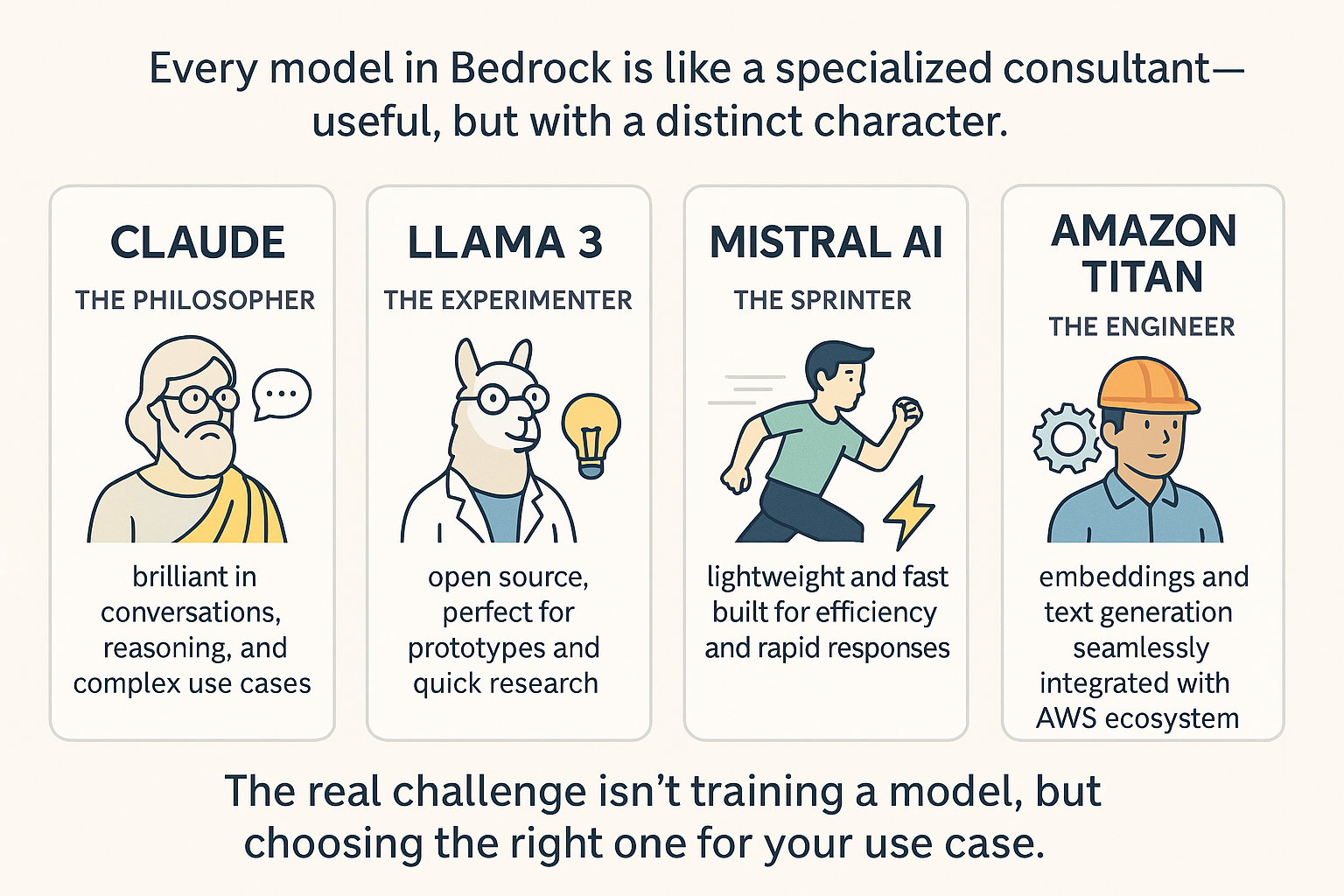

Every model in Bedrock is like a specialized consultant—useful, but with a distinct character.

- Claude (Anthropic) - the philosopher: brilliant in conversations, reasoning, and complex use cases.

- Llama 3 (Meta) - the experimenter: openly available weights, perfect for prototypes and quick research.

- Mistral AI - the sprinter: lightweight and fast, built for efficiency and rapid responses.

- Amazon Titan - the in-house engineer: embeddings and text generation seamlessly integrated with the AWS ecosystem.

The real challenge isn’t training a model, but choosing the right one for your use case.

The AWS Ecosystem as Fertile Ground

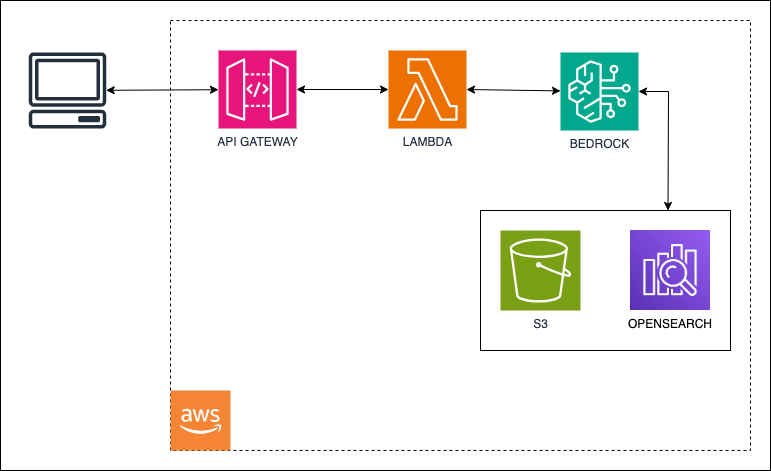

The real power of Bedrock isn’t just the models, but its integration with other AWS services.

Imagine having:

- S3 as your data repository,

- OpenSearch as the engine for intelligent search,

- Lambda orchestrating requests and responses,

- API Gateway exposing everything to the outside world.

And suddenly Bedrock becomes the AI engine at the core of a complete architecture.
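As a rough sketch of the Lambda piece in that picture, the handler below accepts an API Gateway proxy-style event, validates the input, and delegates to a model call. The event and response types are trimmed to the fields used here, and `makeHandler` and `askModel` are hypothetical names. The model call is injected so the example runs without AWS access; in a deployed function it would wrap a Bedrock request.

```typescript
// Minimal subset of the API Gateway proxy event/response shapes used here.
interface ApiEvent {
  body: string | null;
}
interface ApiResponse {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// The model call is injected so the handler can be exercised with a stub.
type AskModel = (question: string) => Promise<string>;

function makeHandler(askModel: AskModel) {
  return async (event: ApiEvent): Promise<ApiResponse> => {
    const headers = { "Content-Type": "application/json" };

    // Parse and validate the request body.
    let question: unknown;
    try {
      question = JSON.parse(event.body ?? "{}").question;
    } catch {
      return { statusCode: 400, headers, body: JSON.stringify({ error: "Body must be valid JSON" }) };
    }
    if (typeof question !== "string" || question.trim() === "") {
      return { statusCode: 400, headers, body: JSON.stringify({ error: "Missing 'question'" }) };
    }

    // Delegate to the model and translate failures into a gateway error.
    try {
      const answer = await askModel(question);
      return { statusCode: 200, headers, body: JSON.stringify({ answer }) };
    } catch {
      return { statusCode: 502, headers, body: JSON.stringify({ error: "Model call failed" }) };
    }
  };
}

// A stub stands in for the real Bedrock call in this sketch.
const handler = makeHandler(async (q) => `You asked: ${q}`);
```

Keeping the model call behind a function parameter also makes it easy to swap models or add retries later without touching the HTTP handling.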

A Concrete Example: The App That Knows Your Documents

Let’s walk through a concrete scenario.

A company wants an internal assistant that answers employee questions.

How does it work?

- Documents are uploaded to S3.

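- They’re split into chunks, converted to embeddings (for example with Amazon Titan), and indexed in OpenSearch to enable semantic search.

- When an employee asks a question, a Lambda function retrieves the most relevant passages.

- The question is combined with those passages and sent to Claude via Bedrock.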

- The user receives a clear, contextual, and—most importantly—data-based answer.
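The "enrichment" step in the flow above can be sketched as a small function that combines the retrieved passages with the user's question. The names (`Passage`, `buildGroundedPrompt`), the instruction wording, and the character budget are illustrative assumptions, and the sample document is invented for the example.

```typescript
// A retrieved passage plus the document it came from.
interface Passage {
  source: string;
  text: string;
}

// Combine passages and question into one prompt, stopping once the
// context would exceed a rough character budget.
function buildGroundedPrompt(
  question: string,
  passages: Passage[],
  maxChars = 4000
): string {
  const context: string[] = [];
  let used = 0;
  for (let i = 0; i < passages.length; i++) {
    const block = `[${i + 1}] (${passages[i].source}) ${passages[i].text.trim()}`;
    if (used + block.length > maxChars) break; // keep the prompt bounded
    context.push(block);
    used += block.length;
  }
  return [
    "Answer the question using only the numbered passages below.",
    "If the passages do not contain the answer, say you don't know.",
    "",
    "Passages:",
    ...context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

// Hypothetical data for illustration.
const examplePrompt = buildGroundedPrompt(
  "How many vacation days do new employees get?",
  [{ source: "hr-policy.pdf", text: "New employees accrue 20 vacation days per year." }]
);
```

Numbering the passages and naming their sources lets the model cite where an answer came from, and the explicit "say you don't know" instruction is a common way to discourage answers that are not backed by the documents.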

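Here’s a minimal snippet for the final step, the call to Claude (TypeScript on Node.js with the AWS SDK for JavaScript v3):

```typescript
import { BedrockRuntimeClient, InvokeModelCommand } from "@aws-sdk/client-bedrock-runtime";

// Credentials are resolved from the environment; the model must be enabled in this region.
const client = new BedrockRuntimeClient({ region: "us-east-1" });

async function askClaude(question: string): Promise<string> {
  const command = new InvokeModelCommand({
    modelId: "anthropic.claude-v2",
    contentType: "application/json",
    accept: "application/json",
    body: JSON.stringify({
      // Claude v2's text-completion format requires Human/Assistant turn markers.
      prompt: `\n\nHuman: ${question}\n\nAssistant:`,
      max_tokens_to_sample: 300,
    }),
  });

  const response = await client.send(command);
  // The response body is a byte array holding JSON with a `completion` field.
  return JSON.parse(new TextDecoder().decode(response.body)).completion;
}

askClaude("What is Amazon Bedrock?").then(console.log).catch(console.error);
```

Other Real-World Use Cases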

Bedrock is not just theory—it shines in everyday scenarios.

Think about document summarization: instead of spending hours scanning lengthy reports or contracts, Bedrock can condense them into a digestible overview in seconds.

Or consider sentiment analysis. A company drowning in customer feedback—support tickets, reviews, survey responses—can finally make sense of the emotional pulse behind the words. Suddenly, trends that were invisible in raw text become actionable insights.
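A minimal sketch of how such a pipeline might look in code: one function builds a classification prompt, another parses the model's reply into a fixed label, and a third tallies the labels. The prompt wording and all names are assumptions, and the parsing is deliberately simple (a reply such as "not positive" would still be read as positive).

```typescript
type Sentiment = "positive" | "neutral" | "negative";

// Ask for a single-word label so the reply is easy to parse.
function buildSentimentPrompt(feedback: string): string {
  return [
    "Classify the sentiment of the customer feedback below.",
    "Reply with exactly one word: positive, neutral, or negative.",
    "",
    `Feedback: ${feedback}`,
  ].join("\n");
}

// Models sometimes add punctuation or extra words, so parse defensively.
function parseSentiment(reply: string): Sentiment | null {
  const match = reply.toLowerCase().match(/\b(positive|neutral|negative)\b/);
  return match ? (match[1] as Sentiment) : null;
}

// Count labels across many replies; unparseable replies are skipped.
function tallySentiment(replies: string[]): Record<Sentiment, number> {
  const counts: Record<Sentiment, number> = { positive: 0, neutral: 0, negative: 0 };
  for (const reply of replies) {
    const label = parseSentiment(reply);
    if (label) counts[label] += 1;
  }
  return counts;
}
```

Each prompt would be sent to a model through Bedrock and the replies fed to `tallySentiment`, turning thousands of free-text comments into three numbers that can be tracked over time.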

Generative tasks are another sweet spot. Marketing teams use Bedrock for content creation, from product descriptions to campaign copy, cutting down what used to be days of work into minutes. And for engineering teams, Bedrock even serves as a code assistant, helping debug or suggest snippets during CI/CD workflows.

Bedrock vs the Competition

Of course, Bedrock doesn’t live in a vacuum. The question many teams ask is: why choose Bedrock over OpenAI, Azure, or Google?

OpenAI’s API has the advantage of cutting-edge GPT models and a huge developer community, but it is a standalone service, so identity, networking, and data integration with your cloud provider are left to you.

Azure OpenAI is the natural choice for organizations already committed to the Microsoft ecosystem, seamlessly tying into Teams and Office 365.

Google Vertex AI is powerful for MLOps and complex data pipelines, offering advanced tooling for data-centric teams.

Bedrock, meanwhile, plays to AWS’s biggest strength: serverless, scalable integration with its vast ecosystem. For companies already living in AWS, the ability to test multiple models (Claude, Llama, Titan, Mistral) inside a unified framework is often the decisive factor.

But What Are the Limits?

Bedrock is powerful, but it’s not a universal magic wand.

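- Variable costs: pricing differs by model and is billed per token, so larger models (like Claude) cost more per request than lighter ones.

- Latency: large models can take seconds to generate a response, which may be too slow for latency-sensitive applications.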

- Lock-in: you are inside the AWS ecosystem, with all its pros and cons.

- Privacy: be careful with what you send in prompts—clear governance is required.
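On the privacy point, one simple guardrail is to mask obvious personal data before a prompt leaves your code. The sketch below is an illustration under stated assumptions: the two regular expressions and the `redact` helper are hypothetical and catch only straightforward email addresses and phone numbers, so they complement a real governance process rather than replace it.

```typescript
// Simple patterns for illustration; real PII detection needs far more care.
const REDACTIONS: { label: string; pattern: RegExp }[] = [
  { label: "[EMAIL]", pattern: /[\w.+-]+@[\w-]+(\.[\w-]+)+/g },
  { label: "[PHONE]", pattern: /\+?\d[\d\s().-]{7,}\d/g },
];

// Replace every match of each pattern with its placeholder label.
function redact(text: string): string {
  return REDACTIONS.reduce(
    (out, rule) => out.replace(rule.pattern, rule.label),
    text
  );
}

// Invented sample input for the example.
const safePrompt = redact(
  "Summarize this ticket: contact jane.doe@example.com or +1 (555) 123-4567."
);
```

Running redaction in the Lambda function, before the Bedrock call, keeps the rule in one place regardless of which model ends up handling the request.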

Conclusion

Amazon Bedrock isn’t just a service—it’s a change in perspective.

The question shifts from “How do I train a model?” to “Which model should I choose today?”

That’s the revolution: AI no longer as a colossal million-dollar project, but as an immediate, integrated, ready-to-use service.

The real question is not whether you’ll use AI, but which Bedrock model you’ll try first.