Why Kubernetes? From VMs to containers, in a few minutes

Introduction

Computing has changed a lot over the last 30 years. There was a time when few people used the Internet, not everyone had a PC at home, and the Web had very few sites. Since then, applications have had to adapt, day after day, to a steadily growing pool of users (clients).

In the ’90s and early 2000s only a few people had a computer at home. Then smartphones arrived and let anyone get online in a few seconds, which led to massive use of applications and the wide spread of the Web.

So, where an application might once have handled a load of around 1,000 requests per second, the rise of social networks meant it had to cope with far higher loads: potentially millions of requests within a few seconds.

Today there is a lot of talk about tools like Kubernetes. But what exactly is it, what does it do, and what problems does it solve?

Before talking about Kubernetes we need to explain what containers are and what virtualization is.

Virtualization

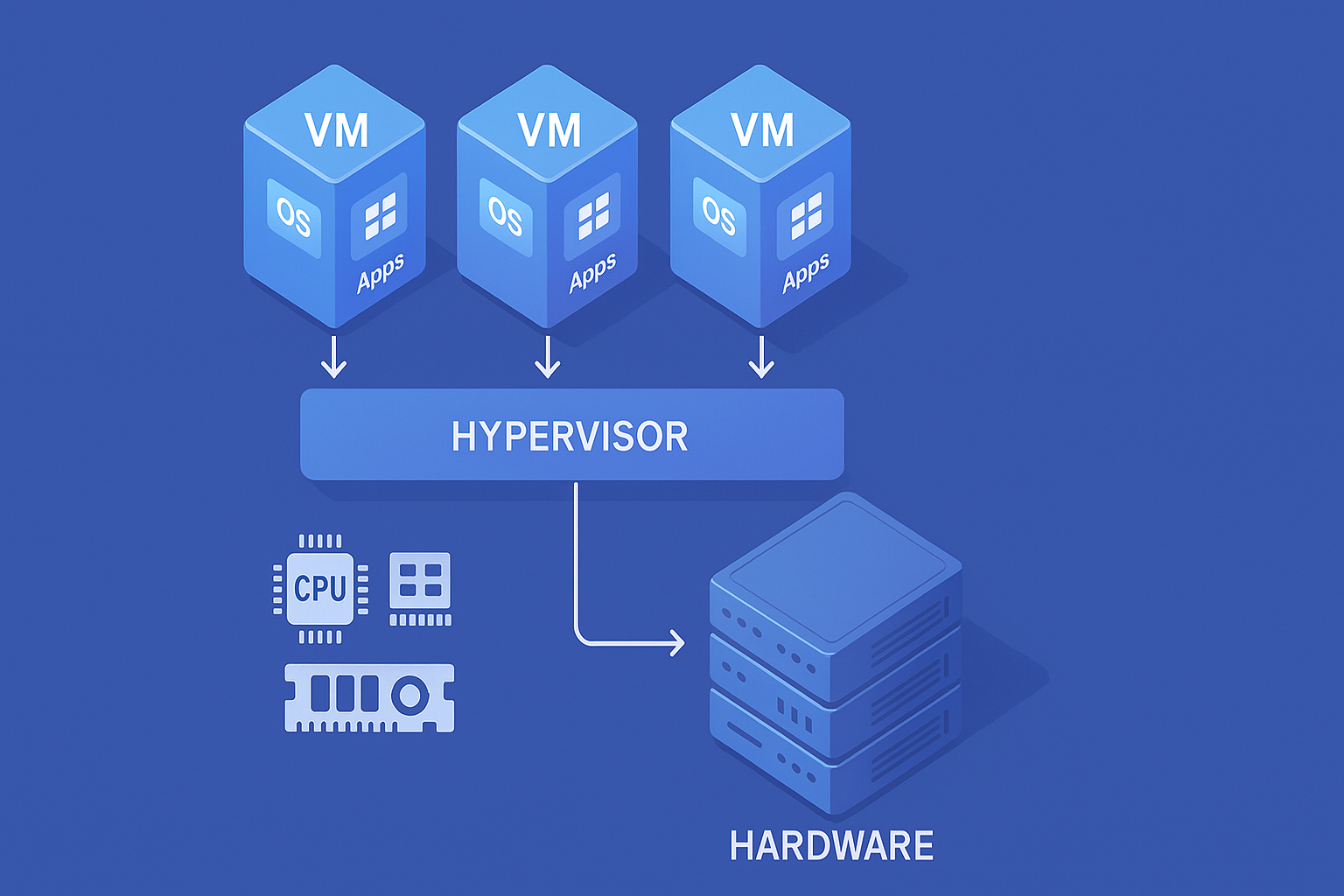

Virtualization is a technology that allows multiple operating systems (OS) to run on a single physical machine through software called a hypervisor. This makes better use of hardware resources, because several operating system instances (the so-called Guest OSes) share a single physical host.

This approach worked well for years, but it has always had some major limitations:

- Each VM has its own Guest OS, which consumes CPU, RAM, and storage, even when several VMs run the same application.

- The more VMs run on a server, the more hardware resources are needed, which raises operating costs and leaves hardware used inefficiently.

- The hypervisor has to manage multiple Guest OSes, which adds overhead.

- More VMs mean more updates, security patches, and maintenance.

- Managing updates on each Guest OS separately increases the risk of errors.

Given the limits of virtualization, over the last decade many teams have moved to containerization. Let’s see what it is and how it can help us.

Containerization

Containerization is a method of operating-system-level virtualization that allows multiple applications to run in isolated environments called containers. Unlike virtual machines, containers do not require a separate Guest OS, reducing resource consumption and improving efficiency.

- Each container typically hosts a single application together with the runtime and libraries it needs.

- There is no separate Guest OS for each application: all containers share the kernel of the same host OS.

- Without a Guest OS per application, containers are lighter than VMs.

- More applications can run on the same hardware with less overhead.

- Containers usually start in seconds or less, whereas VMs have to boot a full OS and often take much longer.

- Containers can run on any system with a compatible container runtime, such as Docker or the runtimes used by Kubernetes.

- Each container is isolated from the others, so a problem or crash in one container generally does not affect other applications.

What is Docker?

Docker is a tool that “packages” an application with everything it needs (libraries, configurations) inside a container: a lightweight and portable environment that runs the same way on any machine with a container runtime. In other words, it is a tool that lets you build and run containers.

These containers, however, always start from something: a definition of what they must contain. To borrow from object-oriented programming, a container is an instance, created from the definition of something else. That “something else,” the class, is the image.

Image

An image is a blueprint: the definition of what a container must contain. In the image we specify what the container includes and what it runs, because, as we said, a container is nothing more than a running instance of that image. We can create any number of containers from the same image.
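The class/instance analogy can be made concrete with a toy Python sketch. The `Image` and `Container` classes and the `run` function below are hypothetical names invented for this illustration. They are not part of Docker's API and only model the relationship between one image and the many containers created from it.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Tuple

# Hypothetical toy model: NOT Docker's API, only an illustration of the analogy.


@dataclass(frozen=True)
class Image:
    """The blueprint: a name plus what every container created from it includes."""
    name: str
    contents: Tuple[str, ...]


_next_id = count(1)  # simple counter used to give each container its own id


@dataclass
class Container:
    """A running instance created from an image."""
    image: Image
    container_id: int = field(default_factory=lambda: next(_next_id))


def run(image: Image) -> Container:
    """Create a new, independent container from the given blueprint."""
    return Container(image=image)


# One image (assumed name and contents), three containers created from it.
web_image = Image(name="web-app", contents=("runtime", "libraries", "app code"))
containers = [run(web_image) for _ in range(3)]

for c in containers:
    print(f"container {c.container_id} created from image {c.image.name!r}")
```

The image is frozen (immutable) on purpose: the blueprint does not change, while each container is a separate object with its own identity.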

Perfect! It all sounds great: with Docker and containers we have achieved a major milestone. But if everything is so good, why do people use Kubernetes and what does Kubernetes have to do with all this?

Before answering, we need to explain what a microservices architecture is.

Microservices (in brief)

As we were saying, the world has changed: today’s applications are large and cover many features. The need therefore arose to break them down into independent blocks, applying the divide et impera (divide and conquer) principle.

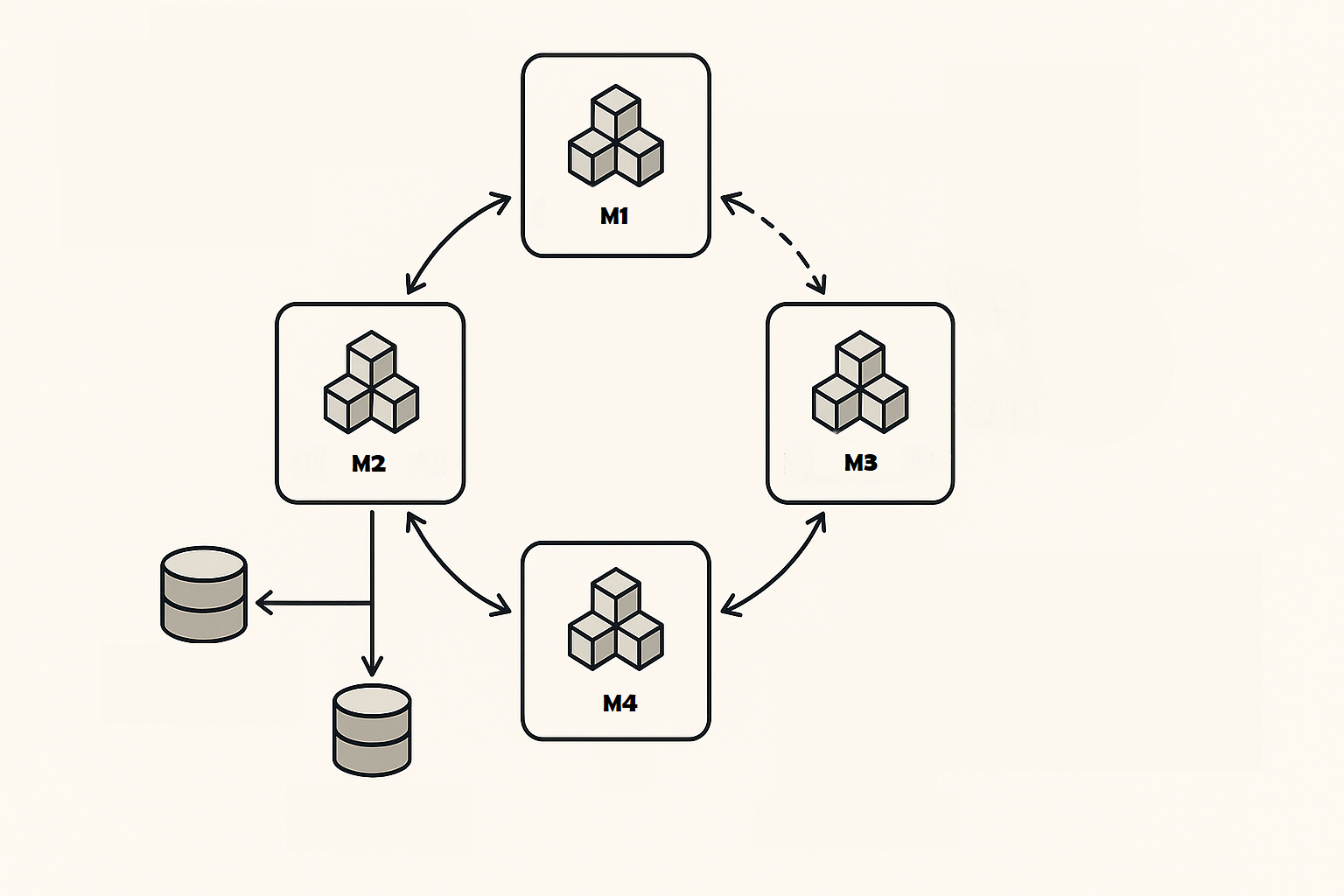

A microservices application is a system composed of multiple small and independent services, each with a well-defined responsibility (e.g. “orders,” “payments,” “catalog”).

These services:

- communicate with each other via APIs or messages,

- can be developed, released, and scaled independently,

- can use different technologies (languages, databases) and have dedicated teams.

The goal is to increase agility, scalability, and resilience compared to the monolithic model, while accepting greater integration and operational complexity.

A microservice is nothing more than a standalone application: an independent block that communicates with other independent blocks. Because each block is independent, each one can be written in the programming language best suited to its use case.
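As a rough illustration of services communicating via messages, the sketch below runs two hypothetical services in one Python process. The `orders_service` and `payments_service` functions, the `order_events` queue, and the event fields are all assumptions made up for this example. In a real system each service would be a separate process and the queue would be a message broker or an HTTP API.

```python
import json
import queue

# Hypothetical in-process stand-in for a message broker between two services.
order_events = queue.Queue()


def orders_service(order_id, amount):
    """'orders' publishes an event instead of calling 'payments' code directly."""
    order_events.put(json.dumps({"order_id": order_id, "amount": amount}))


def payments_service():
    """'payments' consumes the next event and returns a payment record."""
    event = json.loads(order_events.get_nowait())
    return {"order_id": event["order_id"], "charged": event["amount"], "status": "paid"}


orders_service(order_id=42, amount=19.99)
receipt = payments_service()
print(receipt)
```

The two services share only the message format (JSON here), not code, which is what would allow each of them to be written in a different language.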

Now that we know what a microservice is, what a container is, and what Docker is, we can finally answer the question: what is Kubernetes and what is it for?

Kubernetes

Kubernetes is an open-source platform that orchestrates containers: it deploys, scales, updates, and keeps your containerized applications healthy automatically across clusters of machines.

In practice you declare the desired state (how many replicas, which images, resources, network), and Kubernetes takes care of the how: scheduling pods (the smallest deployable units, each wrapping one or more containers) on nodes, load-balancing traffic, restarting failed containers, performing controlled rollouts and rollbacks, and managing configurations and secrets.
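A desired state is usually written as a YAML manifest. To keep all examples in one language, the sketch below expresses the same structure as a Python dictionary and serializes it to JSON, a format that `kubectl apply -f` also accepts. The field layout follows the `apps/v1` Deployment resource. The name `orders`, the image `example.com/orders:1.0`, the port, and the resource figures are placeholders invented for this example.

```python
import json

# Placeholder values (name, image, replica count, port, resources) are
# assumptions made up for this illustration.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "orders"},
    "spec": {
        "replicas": 3,  # how many copies of the pod we want running
        "selector": {"matchLabels": {"app": "orders"}},
        "template": {  # the pod template each replica is created from
            "metadata": {"labels": {"app": "orders"}},
            "spec": {
                "containers": [
                    {
                        "name": "orders",
                        "image": "example.com/orders:1.0",
                        "ports": [{"containerPort": 8080}],
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "128Mi"},
                            "limits": {"cpu": "500m", "memory": "256Mi"},
                        },
                    }
                ]
            },
        },
    },
}

# Serialize the desired state; the result could be saved to a file for kubectl.
manifest = json.dumps(deployment, indent=2)
print(manifest)
```

Nothing in this description says on which node the pods should run or what to do when one fails. It only states what should exist, which is the point of the declarative approach.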

What does it mean that Kubernetes is a container orchestrator?

We said that a microservice is an independent application that communicates with other independent applications. An image is the definition of a container (that is, everything the container contains), and a container is an isolated environment that holds and runs everything an application needs.

So, each microservice can be packaged as a container, and Kubernetes is the way to orchestrate these containers across a cluster of machines.

Let’s imagine, for example, that a microservice is under heavy use: we might want to automatically increase the number of containers for that microservice, so that requests are spread across them. This is called horizontal scaling: increasing the number of instances (containers) to distribute the traffic.
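To give a feel for how such a decision can be made, the Kubernetes documentation for the Horizontal Pod Autoscaler describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that formula. The function name, the default bounds, and the sample numbers are assumptions for illustration, and the real autoscaler adds details (such as a tolerance band and stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the documented scaling ratio, then clamp to assumed bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 3 replicas at 90% average CPU with a 60% target: ceil(3 * 90 / 60) = 5
print(desired_replicas(3, 90, 60))   # 5

# 4 replicas at 20% with a 60% target: ceil(4 * 20 / 60) = 2 (scale down)
print(desired_replicas(4, 20, 60))   # 2

# A very large spike is capped by the assumed maximum of 10 replicas
print(desired_replicas(5, 300, 50))  # 10
```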

Or let’s imagine that a container crashes. Nothing is infallible, so it would be useful to have a mechanism that recreates a container every time one crashes. Kubernetes does this for us: we define how many instances of a container we want, and it is Kubernetes’ job to ensure that many are always running.
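The idea behind this self-healing behavior is a reconciliation loop: compare what is running with what was requested, then correct the difference. The sketch below is a deliberately simplified model of that idea, not Kubernetes code. The `reconcile` function and the `orders-N` container names are hypothetical.

```python
from itertools import count
from typing import List

_next_id = count(1)  # used to give each new container a unique name


def reconcile(desired: int, running: List[str]) -> List[str]:
    """Return a list of running containers that matches the desired count."""
    running = list(running)  # work on a copy, leave the caller's list intact
    while len(running) < desired:
        running.append(f"orders-{next(_next_id)}")  # start a replacement
    while len(running) > desired:
        running.pop()  # stop a surplus container
    return running


# Initial rollout: nothing is running yet, three instances are wanted.
running = reconcile(3, [])
print(running)  # ['orders-1', 'orders-2', 'orders-3']

# Simulate a crash of one container, then reconcile again.
running.remove("orders-2")
running = reconcile(3, running)
print(running)  # ['orders-1', 'orders-3', 'orders-4']
```

Note that the crashed container is not repaired: a new one is started in its place, which is how the requested count is restored.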

These are just some of the many advantages of Kubernetes: a truly effective tool that helps manage microservices applications in the enterprise world.

Conclusion

Over the years, computing has evolved tremendously, and it will continue to do so. Everything starts from a need: serving an ever-growing user base while keeping services available and reliable.

Kubernetes is a tool that helps us a lot. Like anything, however, it needs to be understood and used in context: it is not the solution to every problem. When adopted appropriately, it can be an extra asset to deliver working, high-performance applications to users while at the same time reducing the overhead for those who build them.

This article was written precisely to explain, in simple terms, what Kubernetes is, how we arrived here over the years, and why it is the result of a continuous evolution driven by needs that emerge every day.