When AI Truly Enters Systems

Why the value is not in artificial intelligence, but in how we integrate it

AI always enters this way

At first, it’s almost trivial.

Someone tries something new, maybe out of curiosity, maybe in response to a concrete request.

Within a few days, there’s something that works: it replies, suggests, seems to understand.

It’s not perfect, but it’s good enough to be surprising.

And when it surprises, inevitably, it starts to spread.

Someone actually uses it.

Someone else asks whether it can be integrated “over there as well.”

And without anyone making a formal decision, AI stops being a test and becomes part of everyday conversation.

That’s the moment it truly enters the company.

An ordinary scene, in an ordinary room

The scene is simple.

A meeting room. An open laptop. Two or three people sitting at the table.

Someone says:

“The chatbot is working. People are really using it.”

Someone else nods:

“We could integrate it into flow X as well. Save some time.”

Then the question comes, almost casually:

“But… how much does it cost us every month?”

Silence.

Not because no one knows how to answer,

but because no one has ever really had to think about it.

Someone tries:

“It depends on usage.”

Another adds:

“It depends on the model.”

Someone else:

“Well, if it grows, we’ll see.”

That’s an acceptable answer when you’re talking about an experiment.

It stops being acceptable once something has entered your processes.

And that’s when it becomes clear that the problem isn’t AI.

The problem is how it got in.
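“It depends on usage” and “it depends on the model” are both true, and both can be written down. As a minimal sketch, assuming per-token pricing (a common billing model for hosted language-model APIs) and using placeholder prices and traffic figures rather than any provider’s real rates, a monthly estimate is just usage multiplied by price:

```python
from dataclasses import dataclass


@dataclass
class UsageProfile:
    """How the capability is used; all figures are hypothetical inputs you supply."""
    requests_per_day: int
    input_tokens_per_request: int
    output_tokens_per_request: int


@dataclass
class ModelPricing:
    """Placeholder prices per million tokens; replace with your provider's actual rates."""
    input_per_million: float
    output_per_million: float


def monthly_cost(usage: UsageProfile, pricing: ModelPricing, days: int = 30) -> float:
    """Estimate monthly spend: total tokens in each direction times the price per token."""
    requests = usage.requests_per_day * days
    input_millions = requests * usage.input_tokens_per_request / 1_000_000
    output_millions = requests * usage.output_tokens_per_request / 1_000_000
    return (input_millions * pricing.input_per_million
            + output_millions * pricing.output_per_million)
```

With 10,000 requests a day, 1,000 input and 500 output tokens per request, and placeholder prices of 2.0 and 8.0 per million tokens, this comes to 1,800 currency units a month. Doubling `requests_per_day` doubles it, and swapping in a different `ModelPricing` answers “it depends on the model.” The figure matters less than the fact that “if it grows, we’ll see” becomes a formula someone owns.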

When AI stops being “the intelligent part”

In production, AI changes its nature.

It’s no longer the brilliant part of the project, the one that shines in demos.

It becomes a dependency. One of many.

It has failure modes.

It has variable costs.

It has behaviors that don’t always repeat.

And above all, it starts generating questions that have nothing “AI” about them.

Who monitors it?

How do we know if it’s degrading?

What happens if tomorrow we need to replace it?

These are system questions.

Not lab questions.

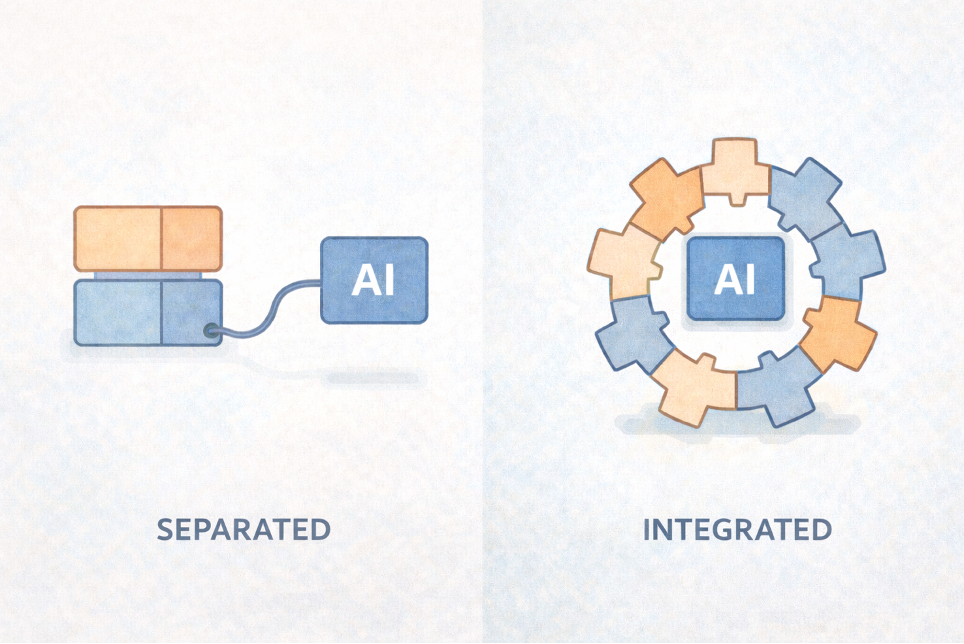

The instinctive reaction (and almost always the wrong one)

At this point, the reaction is nearly always the same.

“Let’s put a layer in front of it.”

“Let’s isolate it.”

“Let’s build a dedicated service.”

It sounds like a good idea.

Keep AI separate. Special. Protected.

But complex systems don’t like exceptions.

They tolerate them for a while. Then they make you pay for them.

Side-by-side is easy. Integration is hard.

And that’s exactly where you see the difference between a solution that works today and one that still holds tomorrow.

When the way you look at the problem changes

It almost always happens without an official meeting.

While working on it, someone realizes that AI isn’t so different from other complex capabilities the system already has.

It’s just more unstable. More opaque. More expensive.

And so the question changes.

It’s no longer:

“How do we make this AI more powerful?”

It becomes:

“How do we make it fit into the system without breaking it?”

And that’s where a simple, uncomfortable realization emerges:

AI is not a special project.

It’s a capability.

And like all capabilities:

either you govern it, or it becomes debt.

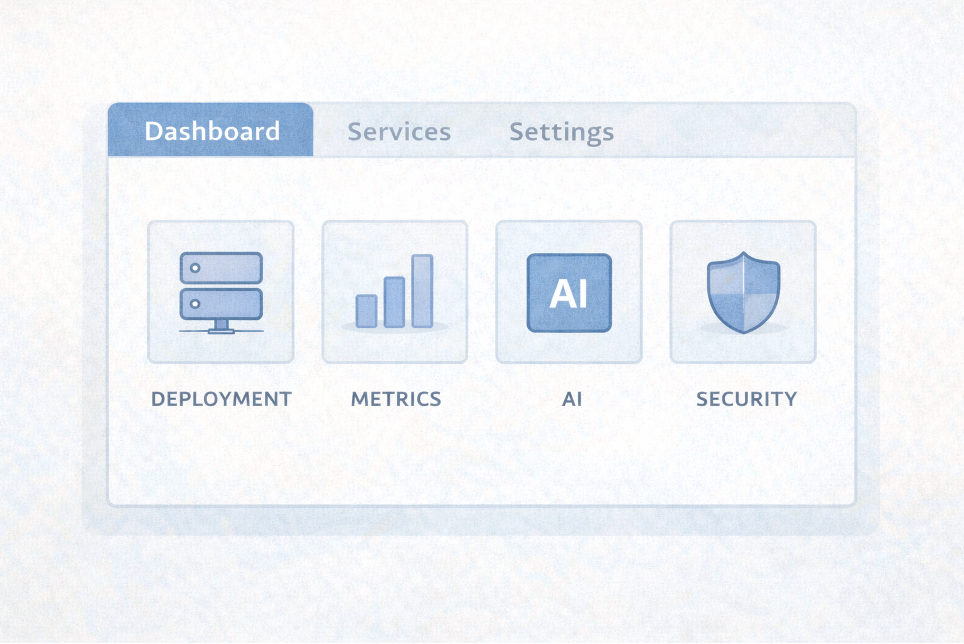

Normalizing instead of glorifying

Truly integrating AI doesn’t mean making it smarter.

It means making it normal.

As normal as the way we deploy software.

As normal as the way we monitor a service.

As normal as the way we manage security and costs.

When AI lives in the same ecosystem as the applications that use it,

it stops being a foreign body.

It becomes part of the system.

With the same rules.

With the same responsibilities.

And above all, it becomes controllable.

When value stops making noise

At that point, the wow effect disappears.

And that’s fine.

There’s no longer the feeling of holding something magical in your hands.

There’s something much better: predictability.

The ability to change without rewriting everything.

The ability to turn it off without bringing the system down.

The ability to understand what’s happening when something goes wrong.

And that’s when something that initially feels counterintuitive becomes clear:

The value is not in AI.

It’s in how it has been integrated.
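The three abilities described above (changing without rewriting everything, turning it off without bringing the system down, understanding what happens when something goes wrong) map onto ordinary engineering patterns: an interface, a kill switch with a fallback, and logging. The following is a minimal Python sketch of that idea. `TextModel`, `AICapability`, and the `fallback` function are hypothetical names for illustration, not part of any real vendor SDK:

```python
import logging
import time
from dataclasses import dataclass
from typing import Callable, Protocol

logger = logging.getLogger("ai_capability")


class TextModel(Protocol):
    """Hypothetical interface: any backend (hosted API, local model, test stub) provides these."""

    name: str

    def complete(self, prompt: str) -> str:
        ...


@dataclass
class AICapability:
    """Wraps a model like any other dependency: swappable, switchable, observable."""

    backend: TextModel              # swap vendors by passing a different adapter
    fallback: Callable[[str], str]  # what the system does when AI is off or failing
    enabled: bool = True            # kill switch, e.g. driven by a feature flag
    calls: int = 0                  # requests actually sent to the backend
    failures: int = 0               # backend errors, a basic degradation signal

    def answer(self, prompt: str) -> str:
        if not self.enabled:
            # Turned off: the rest of the system keeps working on the fallback path.
            return self.fallback(prompt)
        self.calls += 1
        start = time.perf_counter()
        try:
            result = self.backend.complete(prompt)
        except Exception:
            # Broad catch is deliberate in this sketch: any backend failure degrades to the fallback.
            self.failures += 1
            logger.exception("backend=%s failed; using fallback", self.backend.name)
            return self.fallback(prompt)
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("backend=%s latency_ms=%.1f", self.backend.name, elapsed_ms)
        return result
```

Replacing the model means writing a new adapter that satisfies `TextModel`; callers of `answer` do not change. Setting `enabled` to `False` (in practice from configuration or a feature flag) routes traffic to the fallback instead of failing, and the `calls` and `failures` counters plus the latency log give a first, deliberately plain answer to “how do we know if it’s degrading?”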

A story we’ve already seen

This story isn’t new.

It happened with the cloud.

With serverless.

With microservices.

Every time, the technology seemed to be the value.

And every time, it eventually became clear that the value was in the approach.

Frameworks come and go.

Architectural choices remain.

The only decision that matters

AI will enter systems anyway.

Not because we decide it should, but because it’s useful.

The real difference will be made by those who decide early how it enters.

With what rules.

With what level of control.

With what long-term vision.

It’s not a technological choice.

It’s a maturity choice.

And when the hype has faded, what will remain won’t be the AI we chose,

but how we decided to let it enter our systems.